在MDP中,action不仅影响immediatereward,也影响未来的reward。3.1TheAgent-Envi...

3.1 The Agent-Environment Interface

Dynamics of finite MDP:3.2 Goals and Rewards

reward指明goal,但是不能给agent prior knowledge,比如下围棋,赢了一局给1分,但是agent并不知道该怎么下。what you want it to achieve, not how you want it achieved。3.3 Returns and Episodes

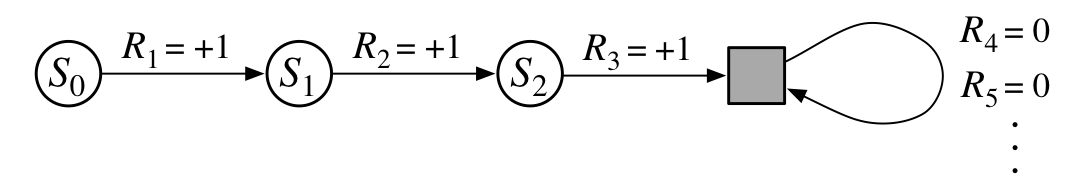

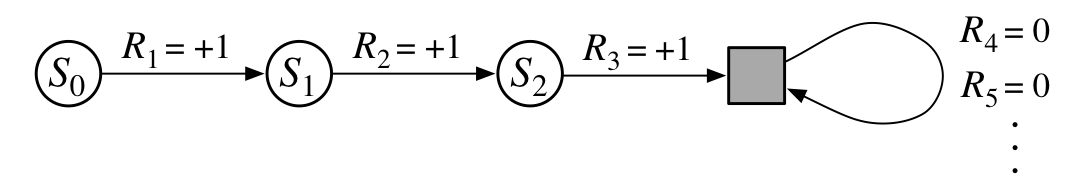

episodic tasks:有terminal states,cumulative reward直接加起来就好。continuing tasks:更多RL问题没有terminal states,一直跑啊跑啊跑啊,于是用discounting来计算discounted return,3.4 Unified Notation for Episodic and Continuing Tasks

用abosorbing state 将episodic tasks 和continuing tasks结合,

于是episodic tasks的reward变成

于是episodic tasks的reward变成 3.5 Policies and Value Functions

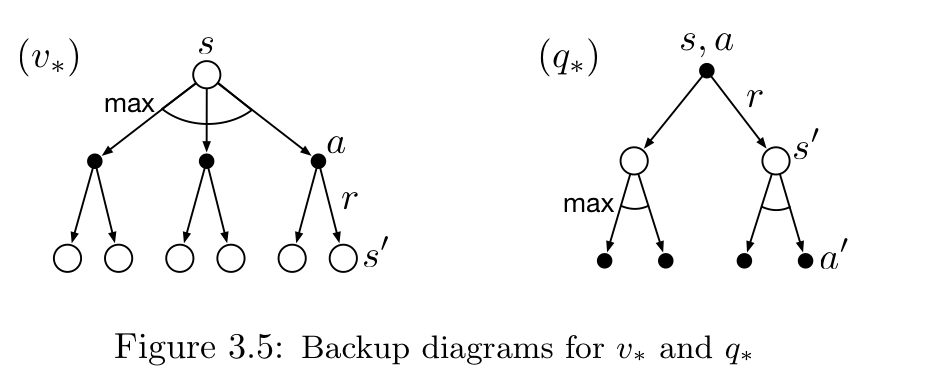

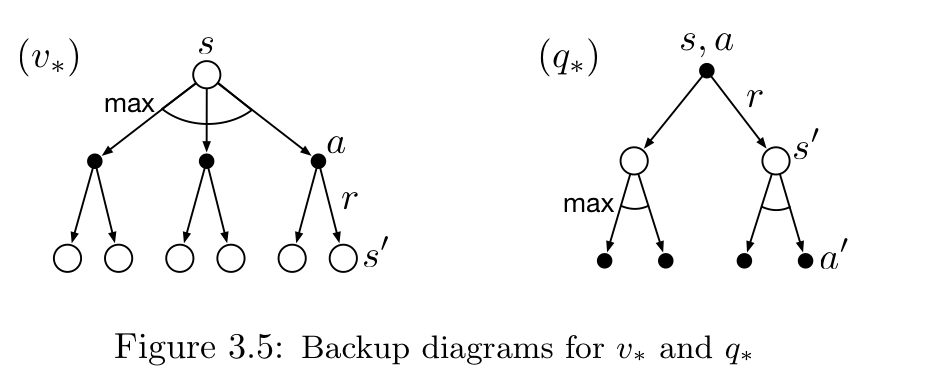

value function: how good it is for the agent to be in a given state (or how good it is to perform a given action in a given state).policy3.6 Optimal Policies and Optimal Value Functions

3.7 Optimality and Approximation

算力不够,时间不够,memory不够,必须用approximation。而且可能存在很多很少见的states,approximation不需要在这些states上做出很好的选择。这也是RL和其他解决MDPs方法一个主要区别。3.8 Summary

本文标题: Intro to RL Chapter 3: Finite Markov Decision Process

本文地址: http://www.lzmy123.com/duhougan/135350.html

如果认为本文对您有所帮助请赞助本站